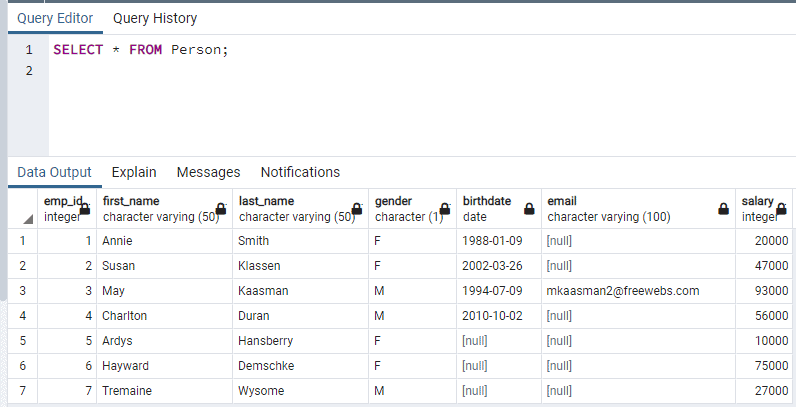

To dump only the name and email of users over the age of 40, we alter our select statement to select only the columns we want: `copy (select name, email from users where age > 40)`

I noticed the following command: `pg_dump -C -h localhost -U localuser dbname | psql -h remotehost -U remoteuser dbname`. But I do not want to copy anything other than data. I need to transfer ONLY DATA from one server to another.

#Postgres copy table update#

Here the query is wrapped in parentheses: `copy (select * from users where age > 40)`. With the copy command finished, we can cat our CSV file again. This time, you can see that we only have the two users that satisfied our query conditions.

One thing to note when we're using a query to get the data from our table is that we can't pass the column name args after the query. Instead, we update the query so we're only returning the columns we want.

I see that there are solutions for copying tables from one PostgreSQL server to another.
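As a rough sketch of the query form described above, the two statements below export a filtered result set. The output path `/tmp/users_over_40.csv` is a placeholder chosen for illustration and is not from the original text. The `users` table and its `name`, `email`, and `age` columns are the ones the walkthrough refers to.

```sql
-- Export every column for users older than 40.
-- The path is a hypothetical example; COPY ... TO a file is written by the
-- server process, so it must be an absolute path the server can write to.
COPY (SELECT * FROM users WHERE age > 40)
  TO '/tmp/users_over_40.csv' WITH CSV HEADER;

-- A column list cannot follow a query, so narrow the columns
-- inside the SELECT itself.
COPY (SELECT name, email FROM users WHERE age > 40)
  TO '/tmp/users_over_40.csv' WITH CSV HEADER;
```

The second statement overwrites the file produced by the first, which mirrors running the command again and re-checking the file with cat.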

To include headers in the generated CSV file, you can pass `with csv header` to the copy command after specifying the output file path. This time when we cat our output file, we can see that the headers have been added to the top.

If we only want specific columns from our table, we can pass them as a comma-separated list after our table name. In this case, let's just select name and email from our users. Now if we cat our CSV again, we should see that we only have the name and email from our users table.

The copy command also supports queries on the table being dumped. To do this, we wrap our query in parentheses and pass it as the first argument to the copy command. In this example, let's only dump users over the age of 40.

try psql>\copy 'TableName' FROM '/home/MyUser/data/TableName.
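The header and column-list options described above might look like the following. This is a minimal sketch: the output path `/tmp/users.csv` is an assumed placeholder, and the `with csv header` spelling is the one the text uses.

```sql
-- Add a header row to the export.
-- '/tmp/users.csv' is a hypothetical path used only for illustration.
COPY users TO '/tmp/users.csv' WITH CSV HEADER;

-- Export only the name and email columns, still with a header row.
COPY users (name, email) TO '/tmp/users.csv' WITH CSV HEADER;
```

Newer PostgreSQL releases also accept the parenthesized option form, `WITH (FORMAT csv, HEADER)`, which should produce the same file.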

We can generate a CSV from table data in Postgres using the copy command. In this example, we're using a dummy database with a table called users. We can see from inspecting the users table that we have a few columns. If we select from the table we can see that we have a few rows of data. Let's dump this data into a CSV using the copy command. The copy command expects a table as an argument, followed by the absolute path for the outputted CSV file. Once the copy command has finished running, we can cat the newly generated file. You'll notice that our CSV file doesn't have headers.

COPY TO can be used only with plain tables, not views, and does not copy rows from child tables or child partitions. For example, `COPY table TO` copies the same rows as `SELECT * FROM ONLY table`. The syntax `COPY (SELECT * FROM table) TO ...` can be used to dump all of the rows in an inheritance hierarchy, partitioned table, or view.

I'm trying to do this using just pg_dump/pg_restore with no editing of the server db between running the two commands. In other words, I don't want to have to go in and manually `drop table attendees cascade` before running the restore. The restore results in this error:

`pg_restore: Error while PROCESSING TOC:`
`pg_restore: Error from TOC entry 2409 0 56001 TABLE DATA attendees`
`pg_restore: COPY failed for table "attendees": ERROR: duplicate key value violates unique constraint "attendees_pkey"`
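For reference, the basic table export described above could be written roughly as follows. The path `/tmp/users.csv` is an assumption for illustration. The last statement shows the query form that covers a view or a table with child partitions.

```sql
-- Inspect the table first, as the walkthrough does.
SELECT * FROM users;

-- Dump the whole table to CSV with no header row.
-- '/tmp/users.csv' is a hypothetical absolute path; the file is written by
-- the server process, so the server's OS user needs write access to it.
COPY users TO '/tmp/users.csv' WITH CSV;

-- Plain COPY users TO ... skips rows in child tables or partitions.
-- Wrapping a SELECT includes them, and also works for views.
COPY (SELECT * FROM users) TO '/tmp/users.csv' WITH CSV;
```

Writing to a server-side file normally needs elevated privileges. From psql, the client-side `\copy` meta-command takes the same arguments and writes the file on the client machine instead.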

I need to rewrite the data in the table on my server with the data in the table on my development computer. How can I do this when the table has a constraint and already exists in both databases? I have tried various forms of pg_dump, using both plain-text and binary formats. I have also tried using the `--clean` and `--data-only` options, but what always gets me when I try to restore is the constraint on the table.

I need to copy refid1 from table1 to the column refid2 in table2. The two things matching will be: id (same column name), and aref1 & bref1.
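One possible way to copy a column between two tables is PostgreSQL's `UPDATE ... FROM` form, sketched below. The question does not say which table holds `aref1` and which holds `bref1`. This sketch assumes `aref1` is a column of `table1` and `bref1` is a column of `table2`, so swap them if the schema is the other way around.

```sql
-- Copy table1.refid1 into table2.refid2 for rows that match on both keys.
-- ASSUMPTION: aref1 lives in table1 and bref1 lives in table2.
UPDATE table2 AS t2
SET refid2 = t1.refid1
FROM table1 AS t1
WHERE t2.id = t1.id
  AND t2.bref1 = t1.aref1;
```

If more than one row in table1 matches a given row in table2, only one of them is used and which one is not predictable, so the match condition should identify at most one source row.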
